Cryptography in the Quantum Era

This section explores the implications that recent developments in quantum computing may have for cryptography, which has always been a pivotal element of Telsy’s expertise.

The series, edited by Telsy’s Cryptography Research Group, approaches the topic from two main perspectives. The first part describes the fundamentals of quantum computing and its cryptographic implications, while the second focuses on a possible response to this threat, namely Post-Quantum Cryptography (PQC).

Classical physics studies natural phenomena at the macroscopic level, whereas quantum physics seeks to understand phenomena at the microscopic level.

Nobel Prize-winning physicist Richard Feynman was among the first to propose a computational model based on these microscopic phenomena, giving rise to quantum computing.

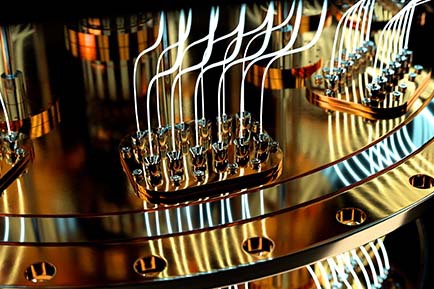

A quantum computer is structurally different from a classical computer.

The main distinguishing element is the unit of information used by a quantum processor: the qubit, or quantum bit.

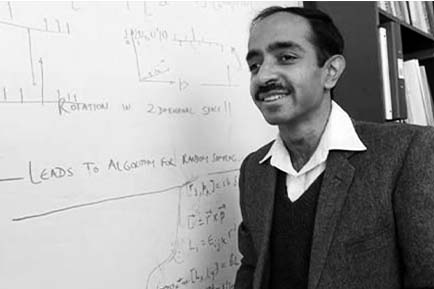

In the 1996 article “A Fast Quantum Mechanical Algorithm for Database Search,” Indian-American computer scientist Lov K. Grover highlighted the potential of quantum computing in the area of search algorithms.

This contribution has a significant impact on the cryptography in use today, since it speeds up exhaustive key search quadratically.
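As a rough illustration of that impact, the sketch below compares the expected cost of a classical exhaustive key search with the approximate number of Grover iterations, both expressed as a base-2 logarithm. It assumes the textbook estimate of about (π/4)·√N iterations for a single marked item among N candidates and ignores the cost of each quantum oracle call; the function names are illustrative.

```python
import math

def classical_cost_log2(key_bits: int) -> float:
    """log2 of the expected number of trials for classical brute force (2**key_bits / 2)."""
    return key_bits - 1

def grover_cost_log2(key_bits: int) -> float:
    """log2 of roughly (pi/4) * sqrt(2**key_bits) Grover iterations."""
    return key_bits / 2 + math.log2(math.pi / 4)

for bits in (128, 256):
    print(bits, classical_cost_log2(bits), round(grover_cost_log2(bits), 2))
```

Under these assumptions a 128-bit key offers a little under 64 bits of security against Grover's search, which is why doubling symmetric key lengths is the usual countermeasure.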

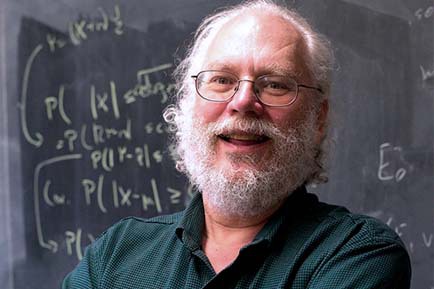

In his 1995 paper “Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer,” U.S. computer scientist Peter Shor described a quantum algorithm capable of breaking the RSA scheme and the Diffie-Hellman protocol, the foundations of most of the secure communication systems in use today.
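The number-theoretic core of Shor's factoring algorithm can be sketched classically: factoring N reduces to finding the multiplicative order of a random base modulo N. In the minimal sketch below the order is found by brute force, which is exactly the step a quantum computer would perform efficiently; the function names are illustrative and the approach is only practical for toy moduli.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r = 1 (mod n), assuming gcd(a, n) == 1.
    Brute force here; this is the part Shor's algorithm speeds up."""
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_via_order(n: int, a: int):
    """Try to split n using base a; returns a pair of factors or None if a is unlucky."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: pick another base
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # trivial square root of 1: pick another base
    p = gcd(y - 1, n)
    return p, n // p

print(factor_via_order(15, 7))    # (3, 5)
```

When a base fails, the procedure is simply repeated with a different random base.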

To understand the challenges associated with building a quantum computer, it is necessary to distinguish between physical qubits and logical qubits. Within a quantum processor, each physical qubit is an unstable entity corresponding to the quantum state of a microscopic phenomenon.

Multiple physical qubits are made to interact in order to stabilize a single quantum state, called a logical qubit.

Post-Quantum Cryptography (PQC) is a classical response to the advent of quantum computing.

It deals with the design of public-key cryptographic schemes that can be implemented on classical processors and are also resistant to quantum attacks.

NIST’s evaluation of post-quantum cryptography has focused on Key Encapsulation Mechanisms (KEMs), key-establishment mechanisms whose properties differ from those guaranteed by the (pre-quantum) Diffie-Hellman paradigm.

As a result, the transition to the post-quantum era is not without obstacles.
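To make the difference in shape concrete, the sketch below shows the three-algorithm interface of a KEM (key generation, encapsulation, decapsulation). The internals are a toy, classical, Diffie-Hellman-style construction and are therefore not post-quantum; they are used here only because they fit in a few lines. The modulus `P`, the base `G`, and the function names are assumptions made for illustration.

```python
import hashlib
import secrets

P = 2**127 - 1   # toy prime modulus (assumption; far too small for real use)
G = 3            # toy base (assumption)

def keygen():
    """Return (public_key, secret_key)."""
    sk = secrets.randbelow(P - 2) + 1
    return pow(G, sk, P), sk

def encaps(pk):
    """Sender side: return (ciphertext, shared_secret) using only the public key."""
    r = secrets.randbelow(P - 2) + 1
    shared = pow(pk, r, P)
    return pow(G, r, P), hashlib.sha256(shared.to_bytes(16, "big")).digest()

def decaps(sk, ct):
    """Receiver side: recover the same shared secret from the ciphertext."""
    shared = pow(ct, sk, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()

pk, sk = keygen()
ct, key_sender = encaps(pk)
assert decaps(sk, ct) == key_sender
```

Unlike a Diffie-Hellman exchange, where both parties contribute a public value symmetrically, a KEM has one party publish a key and the other send a single ciphertext, which is one reason protocols designed around Diffie-Hellman may need adapting.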

Digital signature schemes guarantee information integrity, authenticity, and non-repudiation.

Those most widely used to date (EdDSA, ECDSA, RSA) are vulnerable to attack by a quantum computer, which is why NIST has initiated a process to standardize Post-Quantum signature algorithms.

One-way functions are functions that are easy to compute but computationally hard to invert. They underpin secure asymmetric cryptographic schemes, from RSA and Diffie-Hellman to post-quantum cryptographic algorithms.
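The asymmetry can be illustrated with modular exponentiation, a candidate one-way function in the classical setting (and precisely the one that Shor's algorithm inverts on a quantum computer). In this minimal sketch the forward direction uses fast exponentiation, while the inverse is a naive search whose cost grows with the size of the modulus; the names and the small prime are illustrative choices.

```python
def forward(g: int, x: int, p: int) -> int:
    """Easy direction: g**x mod p, computed with fast modular exponentiation."""
    return pow(g, x, p)

def invert_by_search(g: int, y: int, p: int):
    """Hard direction (discrete logarithm) by exhaustive search over exponents."""
    acc = 1
    for x in range(p - 1):
        if acc == y:
            return x
        acc = (acc * g) % p
    return None

p, g = 101, 2            # toy parameters; 2 generates the multiplicative group mod 101
y = forward(g, 77, p)
print(invert_by_search(g, y, p))   # 77, found only after stepping through the exponents
```

For a modulus of cryptographic size the forward computation stays fast, while the search loop becomes infeasible.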

Lattices are mathematical structures on which one can define computational problems that are considered hard even for a quantum computer. The security of many post-quantum cryptographic schemes in the NIST standardization process rests on these problems.
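A two-dimensional toy example gives some intuition for why such problems can be hard. The sketch below applies a simple rounding heuristic (often called Babai's round-off) to find a lattice point near a target, once with a short, nearly orthogonal basis and once with a long, skewed basis of the same lattice. The specific bases and target are illustrative assumptions; the two bases are related by an integer matrix of determinant 1.

```python
def combine(basis, coeffs):
    """Integer combination x1*b1 + x2*b2 of the two basis vectors."""
    (b1, b2), (x1, x2) = basis, coeffs
    return (x1 * b1[0] + x2 * b2[0], x1 * b1[1] + x2 * b2[1])

def babai_round(basis, target):
    """Write the target in the given basis, round the coefficients, map back."""
    (b1x, b1y), (b2x, b2y) = basis
    det = b1x * b2y - b2x * b1y
    tx, ty = target
    x1 = (b2y * tx - b2x * ty) / det
    x2 = (-b1y * tx + b1x * ty) / det
    return combine(basis, (round(x1), round(x2)))

good_basis = ((5, 1), (-1, 4))
bad_basis = ((17, 16), (21, 21))   # 4*b1 + 3*b2 and 5*b1 + 4*b2: same lattice
target = (3.6, 2.4)

print(babai_round(good_basis, target))  # (5, 1): close to the target
print(babai_round(bad_basis, target))   # (-4, -5): a lattice point, but far away
```

Lattice-based schemes rely on a related gap: a public description of the lattice does not help with such tasks, while a secret, well-shaped description does. In the hundreds of dimensions used in practice, no efficient way of closing that gap is known.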

Learning With Errors (LWE) is an algebraic problem based on the idea of making a system of random linear equations hard to solve by adding noise to it. Considered a hard problem, it now underlies the security of some of the NIST-standardized schemes belonging to lattice-based cryptography.
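The idea of noisy linear equations can be sketched with a toy, Regev-style encryption of a single bit. The public key is a set of equations `b = A·s + e` with small errors `e`; without the noise, the secret `s` could be recovered by Gaussian elimination. The parameters `Q`, `N`, `M` and the error range are illustrative assumptions, far too small to be secure, and chosen so that decryption always succeeds.

```python
import random

Q, N, M = 3329, 8, 16   # toy modulus, secret length, number of equations (assumptions)

def keygen(rng):
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]          # small noise
    b = [(sum(a * x for a, x in zip(row, s)) + err) % Q for row, err in zip(A, e)]
    return (A, b), s

def encrypt(pk, bit, rng):
    A, b = pk
    r = [rng.randrange(2) for _ in range(M)]                # random subset of equations
    u = [sum(r[i] * A[i][j] for i in range(M)) % Q for j in range(N)]
    v = (sum(ri * bi for ri, bi in zip(r, b)) + bit * (Q // 2)) % Q
    return u, v

def decrypt(s, ct):
    u, v = ct
    d = (v - sum(x * y for x, y in zip(u, s))) % Q          # = noise + bit * Q//2
    return 1 if Q // 4 < d < 3 * Q // 4 else 0

rng = random.Random(0)
pk, sk = keygen(rng)
assert all(decrypt(sk, encrypt(pk, bit, rng)) == bit for bit in (0, 1, 1, 0))
```

Decryption works because the accumulated noise (at most 16 in absolute value here) stays well below `Q // 4`, so the holder of `s` can still tell whether `Q // 2` was added.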

In NIST’s standardization process for identifying and analyzing Post-Quantum Cryptography (PQC) solutions, the first crucial outcome is the selection of the key encapsulation mechanism (KEM) CRYSTALS-Kyber.

Kyber is a public-key cryptographic scheme that allows two parties to derive a common secret to protect the exchange of information.

The first of the Post-Quantum digital signature schemes selected by NIST for standardization is CRYSTALS-Dilithium, based on the construction called “Fiat-Shamir with Aborts” introduced in 2009 by Ukrainian-U.S. mathematician Vadim Lyubashevsky.

The second of the Post-Quantum digital signature schemes selected by NIST for standardization is Falcon, based on the GPV framework for constructing hash-and-sign signatures on lattices introduced in 2008 by Gentry, Peikert, and Vaikuntanathan.

With the completion of the third round of the post-quantum standardization process and the subsequent selection of the winning algorithms, NIST announced a fourth round to diversify the security assumptions underlying the selected schemes.

Among the remaining schemes, only those for key establishment were deemed eligible. NIST therefore found it necessary to launch a new process focused on the selection of digital signatures.

Error-correcting codes originated in telecommunications as a way to detect and correct errors that may occur on a noisy channel. Today, computational problems that are hard even for a quantum computer can be built on them, and these problems form the basis of some of the major post-quantum cryptographic schemes.
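The original, telecommunications use of codes can be illustrated with the classic Hamming(7,4) code, which adds three parity bits to four data bits and corrects any single flipped bit. This is a minimal sketch with illustrative function names; it is not one of the codes used in post-quantum schemes.

```python
def encode(data):
    """Encode 4 data bits into a 7-bit codeword (parity bits at positions 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p4 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p4, d2, d3, d4]

def syndrome(word):
    """Return 0 for a valid codeword, else the 1-based position of a single error."""
    s1 = word[0] ^ word[2] ^ word[4] ^ word[6]
    s2 = word[1] ^ word[2] ^ word[5] ^ word[6]
    s4 = word[3] ^ word[4] ^ word[5] ^ word[6]
    return s1 + 2 * s2 + 4 * s4

def decode(word):
    """Correct up to one flipped bit, then extract the 4 data bits."""
    word = list(word)
    pos = syndrome(word)
    if pos:
        word[pos - 1] ^= 1
    return [word[2], word[4], word[5], word[6]]

codeword = encode([1, 0, 1, 1])
codeword[5] ^= 1                      # the noisy channel flips one bit
assert decode(codeword) == [1, 0, 1, 1]
```

Decoding is easy here because the code has a visible structure; code-based cryptography relies on the fact that decoding a code without known structure is believed to be hard.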

The Syndrome Decoding Problem (SD) is the mathematical problem underlying the McEliece (1978) and Niederreiter (1986) cryptosystems that gave rise to the branch of post-quantum cryptography called code-based cryptography.
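In concrete terms, the problem asks: given a parity-check matrix `H`, a syndrome `s`, and a weight bound `t`, find an error vector `e` of weight at most `t` such that `H·e = s` over GF(2). The sketch below solves a toy instance by exhaustive search over error supports; the matrix (the parity-check matrix of a Hamming(7,4) code) and the function names are illustrative assumptions.

```python
from itertools import combinations

def syndrome(H, e):
    """Compute H * e over GF(2)."""
    return tuple(sum(h * x for h, x in zip(row, e)) % 2 for row in H)

def solve_sd(H, s, t):
    """Search all error vectors of weight <= t, lightest first."""
    n = len(H[0])
    for weight in range(t + 1):
        for support in combinations(range(n), weight):
            e = [1 if i in support else 0 for i in range(n)]
            if syndrome(H, e) == tuple(s):
                return e
    return None

H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
print(solve_sd(H, (1, 0, 1), 1))   # [0, 0, 0, 0, 1, 0, 0]
```

The number of candidate supports grows combinatorially with the code length and the weight bound, which is what makes generic instances intractable at cryptographic sizes.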

The most conservative of the post-quantum encapsulation schemes analyzed by NIST is Classic McEliece, based on Niederreiter’s (1986) scheme defined on Goppa codes.

Cryptographic hash functions are used in most communication protocols in use today. Their properties alone are sufficient to construct a post-quantum digital signature algorithm.
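One of the simplest constructions of this kind is the Lamport one-time signature, sketched below with SHA-256. The secret key is a table of random values, the public key is the table of their hashes, and a signature reveals one secret value per bit of the message digest. This is a minimal sketch with illustrative names; each key pair must sign only one message.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    """256 pairs of random secrets; the public key holds their hashes."""
    sk = [[secrets.token_bytes(32), secrets.token_bytes(32)] for _ in range(256)]
    pk = [[H(a), H(b)] for a, b in sk]
    return pk, sk

def digest_bits(msg: bytes):
    d = H(msg)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(sk, msg: bytes):
    """Reveal, for each digest bit, the secret that corresponds to its value."""
    return [sk[i][bit] for i, bit in enumerate(digest_bits(msg))]

def verify(pk, msg: bytes, sig) -> bool:
    return all(H(s) == pk[i][bit]
               for i, (s, bit) in enumerate(zip(sig, digest_bits(msg))))

pk, sk = keygen()
sig = sign(sk, b"hello")
assert verify(pk, b"hello", sig)
assert not verify(pk, b"tampered", sig)
```

Security rests only on the hash function being hard to invert, which is why constructions of this family are regarded as conservative post-quantum candidates.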

XMSS is an early example of a digital signature algorithm based entirely on cryptographic hash functions that has been standardized by NIST.
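A central ingredient of XMSS is a Merkle hash tree, which lets a single public root authenticate many one-time signature keys. The sketch below shows only that ingredient: building the tree, extracting the authentication path of a leaf, and checking it against the root. It uses plain SHA-256 and illustrative names, and it omits the one-time signature scheme and the additional hashing details that the actual XMSS specification defines.

```python
import hashlib

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def build_tree(leaves):
    """Return all levels, from hashed leaves to [root]; len(leaves) must be a power of two."""
    levels = [[H(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([H(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels

def auth_path(levels, index):
    """Sibling hashes needed to recompute the root from leaf `index`."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])
        index //= 2
    return path

def verify(root, leaf, index, path) -> bool:
    node = H(leaf)
    for sibling in path:
        node = H(node + sibling) if index % 2 == 0 else H(sibling + node)
        index //= 2
    return node == root

leaves = [bytes([i]) * 4 for i in range(8)]   # stand-ins for one-time public keys
levels = build_tree(leaves)
root = levels[-1][0]
assert verify(root, leaves[3], 3, auth_path(levels, 3))
```

In a scheme of this kind, a signature would carry a one-time signature, the index of the key used, and the authentication path, so the signer has to keep track of which leaves have already been used.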